Retrieval optimizer: Custom data

In the previous blog posts on grid search and Bayesian optimization with the retrieval optimizer, we kept things simple by pulling pre-defined, pre-formatted data. But suppose you have custom data in a specific schema, with a particular way of querying it that you want to test, and it doesn’t fit the pre-defined functions in the retrieval optimizer. What then? The library was designed around this need and makes it straightforward to define your own processing and search functions for use with the application.

In this blog post, we'll walk through how to define a custom corpus_processor function to transform raw source data into a format that can be efficiently indexed by Redis. We'll also demonstrate how to implement custom search_methods tailored to the specific fields within the dataset, allowing for more flexible and targeted querying. The dataset used in this example consists of text chunks and embeddings derived from car manuals for two different types of vehicles.

Custom data example

📓 A complete code notebook example is available here.

Corpus

In the previous examples, the corpus data was defined as a large dictionary and only had fields for text and title. This data, however, is different and contains chunked data from a few different car manuals along with query_metadata.
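To make the shape concrete, here is a minimal sketch of what such records might look like. Apart from `query_metadata` and the chunk id `mazda_3:86`, every field name and value below is a hypothetical placeholder and is not copied from the real dataset.

```python
from collections import defaultdict

# Hypothetical corpus records. Only `query_metadata` and the id "mazda_3:86"
# come from the post; other field names and all values are illustrative.
corpus = [
    {
        "item_id": "mazda_3:86",
        "text": "Placeholder text for one chunk of the first car manual.",
        "query_metadata": {"make": "mazda", "model": "3"},
    },
    {
        "item_id": "example_model:12",
        "text": "Placeholder text for one chunk of the second car manual.",
        "query_metadata": {"make": "example_make", "model": "example_model"},
    },
]

# Group chunk ids by vehicle to see how the metadata partitions the corpus.
chunks_by_vehicle = defaultdict(list)
for record in corpus:
    meta = record["query_metadata"]
    chunks_by_vehicle[(meta["make"], meta["model"])].append(record["item_id"])

print(dict(chunks_by_vehicle))
```

The point of the grouping step is only to show that each chunk carries enough metadata to be tied back to a single vehicle.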

Queries

The queries differ as well: each query we want to execute needs to use the additional data provided in its query_metadata attribute.
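As a rough sketch of why the metadata matters at query time, the snippet below narrows candidate chunks to those whose metadata matches the query. The query text, field names other than `query_metadata`, and the helper `metadata_matches` are all hypothetical.

```python
from typing import Dict, List

# Hypothetical query record; only the `query_metadata` attribute is named in the post.
query = {
    "query_id": "q1",
    "query": "Placeholder question about the first vehicle.",
    "query_metadata": {"make": "mazda", "model": "3"},
}

# Hypothetical chunk metadata keyed by chunk id.
chunk_metadata: Dict[str, Dict[str, str]] = {
    "mazda_3:86": {"make": "mazda", "model": "3"},
    "example_model:12": {"make": "example_make", "model": "example_model"},
}


def metadata_matches(chunk_meta: Dict[str, str], query_meta: Dict[str, str]) -> bool:
    """Return True when every key in the query metadata has the same value on the chunk."""
    return all(chunk_meta.get(key) == value for key, value in query_meta.items())


# Keep only the chunks that belong to the vehicle the query is about.
candidates: List[str] = [
    chunk_id
    for chunk_id, meta in chunk_metadata.items()
    if metadata_matches(meta, query["query_metadata"])
]

print(candidates)
```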

Qrels

Qrels, short for query relevance judgments, are structured annotations that map queries (in this case about cars) to documents (e.g., the chunk with id mazda_3:86) with binary or graded relevance labels. They’re a standard evaluation format from the information retrieval community (originating with TREC) used to assess how well a system retrieves relevant results. In this example, we use qrels to define which manual chunks are relevant to each query, allowing us to evaluate the performance of a retrieval model.
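For intuition, qrels can be pictured as a nested mapping from query id to document id to relevance label, and a system's results as the same shape with scores. The sketch below computes recall@k over such mappings in plain Python. The ids other than `mazda_3:86`, the scores, and the function name `recall_at_k` are hypothetical, and this is not the evaluation code the retrieval optimizer itself uses.

```python
from typing import Dict

Qrels = Dict[str, Dict[str, int]]   # query_id -> {doc_id: relevance label}
Run = Dict[str, Dict[str, float]]   # query_id -> {doc_id: retrieval score}

# Hypothetical judgments and retrieval scores for a single query.
qrels: Qrels = {"q1": {"mazda_3:86": 1, "mazda_3:87": 1}}
run: Run = {"q1": {"mazda_3:86": 0.9, "mazda_3:10": 0.8, "mazda_3:87": 0.7}}


def recall_at_k(qrels: Qrels, run: Run, k: int) -> float:
    """Average, over judged queries, of the fraction of relevant docs found in the top k."""
    per_query = []
    for query_id, judgments in qrels.items():
        relevant = {doc_id for doc_id, label in judgments.items() if label > 0}
        if not relevant:
            continue  # a query with no relevant docs cannot contribute to recall
        scored = run.get(query_id, {})
        top_k = sorted(scored, key=scored.get, reverse=True)[:k]
        per_query.append(len(relevant.intersection(top_k)) / len(relevant))
    return sum(per_query) / len(per_query) if per_query else 0.0


print(recall_at_k(qrels, run, k=2))
print(recall_at_k(qrels, run, k=3))
```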

Custom search methods

The retrieval optimizer can use any search method you define, as long as it takes a SearchMethodInput and returns a SearchMethodOutput (see schema definitions here). What happens between those two points is up to you: you may want to add custom query rewriting steps, regex matching, or other techniques to get the most out of your search.

The example uses two custom search techniques: one that uses default vector search and one that performs hybrid search. It uses the query_metadata available with the corpus and queries shown above.

Once defined, you can make use of these functions in your study by creating a search_method_map. A search method map is a simple Python dictionary that maps the string provided in the study config to the user-defined function.
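The dispatch pattern can be sketched without Redis at all. In the snippet below, `StubInput` and `StubOutput` are hypothetical stand-ins for the library's SearchMethodInput and SearchMethodOutput (their real fields may differ), the method names in the map are made up, and a toy word-overlap score stands in for vector similarity.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class StubInput:
    """Hypothetical stand-in for SearchMethodInput."""
    queries: Dict[str, dict]
    corpus: List[dict]


@dataclass
class StubOutput:
    """Hypothetical stand-in for SearchMethodOutput."""
    run: Dict[str, Dict[str, float]]  # query_id -> {chunk_id: score}


def overlap_score(query_text: str, chunk_text: str) -> float:
    """Toy relevance score: number of shared lowercase words (not vector search)."""
    return float(len(set(query_text.lower().split()) & set(chunk_text.lower().split())))


def basic_search(inp: StubInput) -> StubOutput:
    """Score every chunk for every query, ignoring metadata."""
    run = {
        qid: {c["item_id"]: overlap_score(q["query"], c["text"]) for c in inp.corpus}
        for qid, q in inp.queries.items()
    }
    return StubOutput(run=run)


def filtered_search(inp: StubInput) -> StubOutput:
    """Score only the chunks whose query_metadata equals the query's."""
    run = {
        qid: {
            c["item_id"]: overlap_score(q["query"], c["text"])
            for c in inp.corpus
            if c["query_metadata"] == q["query_metadata"]
        }
        for qid, q in inp.queries.items()
    }
    return StubOutput(run=run)


# Maps the strings a study config would list to the functions defined above.
search_method_map: Dict[str, Callable[[StubInput], StubOutput]] = {
    "basic_vector": basic_search,
    "pre_filter_vector": filtered_search,
}

stub_input = StubInput(
    queries={"q1": {"query": "how often check tire pressure",
                    "query_metadata": {"make": "mazda", "model": "3"}}},
    corpus=[
        {"item_id": "mazda_3:86", "text": "check the tire pressure monthly",
         "query_metadata": {"make": "mazda", "model": "3"}},
        {"item_id": "example_model:12", "text": "check the tire pressure weekly",
         "query_metadata": {"make": "example_make", "model": "example_model"}},
    ],
)

outputs = {name: method(stub_input) for name, method in search_method_map.items()}
for name, output in outputs.items():
    print(name, output.run)
```

Because both functions share one input and output type, the study can call any entry in the map by name without knowing how it searches.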

Custom corpus processor

Under the hood, the retrieval optimizer creates a Redis search index and populates it with the provided corpus data. For this process to work, the corpus data must be converted into a list of dictionaries that can be effectively indexed.

The corpus processor function takes two arguments:

- corpus: the data to be acted upon

- an embedding model, since the retrieval optimizer is built around vector search

Note: the get_embedding_model function provided by redis_retrieval_optimizer uses an embedding model dictionary and initializes the embedding model for you in a form the application can use.
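A corpus processor along these lines might look like the sketch below. The record layout, the output field names, the function name `process_corpus`, and the `StubEmbeddingModel` class are all assumptions made for illustration. The stub returns a tiny fake vector so the example runs without downloading a model, and a real model would return a much higher-dimensional embedding.

```python
from typing import Dict, List


class StubEmbeddingModel:
    """Hypothetical stand-in for a real embedding model."""

    def embed(self, text: str) -> List[float]:
        # Fake two-dimensional "embedding": character count and word count.
        return [float(len(text)), float(len(text.split()))]


def process_corpus(corpus: List[Dict], emb_model) -> List[Dict]:
    """Flatten raw records into a list of dictionaries, one per chunk, ready for indexing."""
    return [
        {
            "_id": record["item_id"],
            "text": record["text"],
            # Lift the nested metadata to top-level fields so it can be indexed.
            "make": record["query_metadata"]["make"],
            "model": record["query_metadata"]["model"],
            "vector": emb_model.embed(record["text"]),
        }
        for record in corpus
    ]


raw_corpus = [
    {
        "item_id": "mazda_3:86",
        "text": "Placeholder chunk text",
        "query_metadata": {"make": "mazda", "model": "3"},
    }
]

processed = process_corpus(raw_corpus, StubEmbeddingModel())
print(processed[0])
```

Flattening `query_metadata` into top-level fields is what lets a search method filter on them later.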

Define study config

Note:

- The search method strings match those of our custom method map

- We’ve added additional fields that correspond to the data attributes we wish to index based on the results of our corpus processor function
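Both notes describe consistency requirements that can be checked before a study starts. The sketch below uses a plain dictionary with hypothetical keys (`search_methods`, `additional_fields`) in place of the real study config, whose actual schema is defined by the library, and the helper `check_config` is likewise made up for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical config fragment; key names are illustrative, not the library's schema.
study_config = {
    "search_methods": ["basic_vector", "pre_filter_vector"],
    "additional_fields": [
        {"name": "make", "type": "tag"},
        {"name": "model", "type": "tag"},
    ],
}


def basic_search(search_input):
    """Placeholder for a user-defined search method."""
    return None


def filtered_search(search_input):
    """Placeholder for a second user-defined search method."""
    return None


search_method_map: Dict[str, Callable] = {
    "basic_vector": basic_search,
    "pre_filter_vector": filtered_search,
}

# One record as a corpus processor might emit it (hypothetical field names).
sample_record = {"_id": "mazda_3:86", "text": "...", "make": "mazda", "model": "3"}


def check_config(config: dict, method_map: Dict[str, Callable], record: dict) -> List[str]:
    """Return a list of mismatches between the config, the method map, and the processed data."""
    problems = []
    for name in config["search_methods"]:
        if name not in method_map:
            problems.append(f"unknown search method: {name}")
    for field in config["additional_fields"]:
        if field["name"] not in record:
            problems.append(f"field not produced by corpus processor: {field['name']}")
    return problems


problems = check_config(study_config, search_method_map, sample_record)
print(problems or "config is consistent")
```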

Run the study

Now that we have defined our custom search methods and our corpus processor, we can run a study with our custom data.

Example output

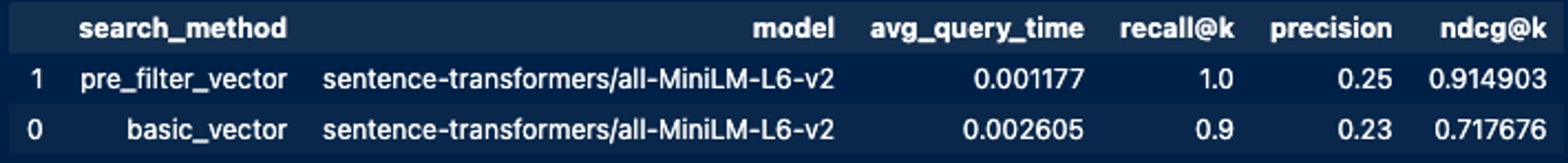

From this simple study we see that we greatly increased our retrieval performance by making use of our custom query_metadata fields.

Next steps

In this example you learned:

- How to define a custom corpus processor to leverage source data of arbitrary format in the retrieval optimizer.

- How to define custom search methods to test specific retrieval methods against each other for your app.

You can find a complete notebook example here.
